Difference between revisions of "Personalized Problems"

Sfrancisco (talk | contribs) (Updated pattern according to writing workshop comments from PLoP 2015.) |

Sfrancisco (talk | contribs) m (Edited format) |

||

| (14 intermediate revisions by 2 users not shown) | |||

| Line 4: | Line 4: | ||

{{Infobox_designpattern | {{Infobox_designpattern | ||

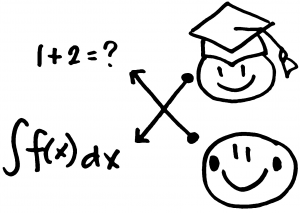

|image=Personalized_problems.png | |image=Personalized_problems.png | ||

|contributor=[[ | |contributor=[[Paul Salvador Inventado]], [[Peter Scupelli]] | ||

|source= Inventado and Scupelli (in press 2015)<ref name="Inventadoip">Inventado, P.S. & Scupelli, P. (in press 2015). [https://cmu.box.com/shared/static/m6qfs01z71gt38a7tf85gcgl8t84iw50.pdf A Data-driven Methodology for Producing Online Learning System Design Patterns]. In ''Proceedings of the 22nd Conference on Pattern Languages of Programs (PLoP 2015)''. New York:ACM.</ref> | |||

|dataanalysis=[[Analysis:Student_affect_and_interaction_behavior_in_ASSISTments#skillleve|Student affect and interaction behavior in ASSISTments]] | |dataanalysis=[[Analysis:Student_affect_and_interaction_behavior_in_ASSISTments#skillleve|Student affect and interaction behavior in ASSISTments]] | ||

|domain= General | |domain= General | ||

| Line 13: | Line 14: | ||

}} | }} | ||

Assign appropriate problem-solving activities to a student’s skill level | Assign appropriate problem-solving activities to a student’s skill level<ref name="Inventadoip"/> | ||

==Context== | ==Context== | ||

| Line 22: | Line 23: | ||

==Forces== | ==Forces== | ||

#'''Prior knowledge.''' Students cannot solve a problem if they lack the necessary skills<ref name="Sweller2004">Sweller, J. (2004). [http://link.springer.com/article/10.1023%2FB%3ATRUC.0000021808.72598.4d Instructional design consequences of an analogy between evolution by natural selection and human cognitive architecture]. Instructional science, 32(1-2), 9-31.</ref>. | #'''Prior knowledge.''' Students cannot solve a problem if they lack the necessary skills<ref name="Sweller2004">Sweller, J. (2004). [http://link.springer.com/article/10.1023%2FB%3ATRUC.0000021808.72598.4d Instructional design consequences of an analogy between evolution by natural selection and human cognitive architecture]. ''Instructional science, 32''(1-2), 9-31.</ref>. | ||

#'''Desirable difficulty.''' Activities that are too easy or do not challenge learners’ understanding of a concept require less mental processing and often, result in less | #'''Desirable difficulty.''' Activities that are too easy or do not challenge learners’ understanding of a concept require less mental processing and often, result in less learning<ref>Bjork, R.A. (1994). [http://psycnet.apa.org/psycinfo/1994-97967-009 Memory and metamemory considerations in the training of human beings]. In J. Metcalfe and A. Shimamura (Eds.), ''Metacognition: Knowing about knowing''. (pp.185-205). Cambridge, MA: MIT Press.</ref><ref>Piaget, J. (1952).The origins of intelligence. New York: International University Press.</ref>. | ||

#'''Learning rate.''' Student learning rates vary because of differences in prior knowledge, learning experiences, and quality of instruction received<ref name="Bloom1974">Bloom, B. S. (1974). [http://psycnet.apa.org/journals/amp/29/9/682/ Time and learning]. ''American psychologist, 29''(9), 682.</ref>. | |||

#'''Persistence.''' Students may disengage from a learning activity if they get stuck too long while trying to solve it<ref>Arnold, A., Scheines, R., Beck, J. E., and Jerome, B. (2005). [https://oli.cmu.edu/wp-oli/wp-content/uploads/2012/05/Arnold_2005_Time_and_Attention.pdf Time and attention: Students, sessions, and tasks]. In ''Proceedings of the AAAI 2005 Workshop Educational Data Mining'' (pp. 62-66).</ref><ref>D’Mello, S., and Graesser, A. (2012). [http://www.sciencedirect.com/science/article/pii/S0959475211000806 Dynamics of affective states during complex learning]. ''Learning and Instruction, 22''(2), 145-157.</ref>. | |||

#'''Learning rate.''' | |||

#''' | |||

==Solution== | ==Solution== | ||

Therefore, assign | Therefore, assign problems that are appropriate for a student’s skill level. A student’s capability to solve a problem can be identified using assessments of their knowledge on pre-requisite skills, or model-based predictors<ref name="Koedinger2007">Koedinger, K. R., and Aleven, V. (2007). [http://link.springer.com/article/10.1007/s10648-007-9049-0 Exploring the assistance dilemma in experiments with cognitive tutors]. ''Educational Psychology Review, 19''(3), 239-264.</ref>. | ||

==Consequences== | ==Consequences== | ||

===Benefits=== | ===Benefits=== | ||

#Students | #Students are asked to answer problems that they are capable of solving themselves, or with some assistance<ref>Vygotsky, L. S. (1962). ''Language and thought''. Massachusetts Institute of Technology Press, Ontario, Canada.</ref>. | ||

#The problem challenges students because it requires skills that students may not have mastered yet. | |||

# | #Each student is assigned to a different problem that is appropriate for his/her skill level. | ||

# | #Students may continue to solve a challenging problem if they have enough prerequisite knowledge. | ||

# | |||

===Liabilities=== | ===Liabilities=== | ||

#Content writers will need to provide content for | #Content writers will need to provide different content for each skill level. | ||

#The system needs to keep track of pre-requisite and post-requisite skills, as well as problems associated with those skills so they can be assigned appropriately. | |||

#The system needs to be capable of measuring students’ skill level and selecting problems dynamically. | |||

#If students’ skill level is incorrectly identified, the system can still give students problems that are too easy or too difficult. | #If students’ skill level is incorrectly identified, the system can still give students problems that are too easy or too difficult. | ||

| Line 48: | Line 49: | ||

===Literature=== | ===Literature=== | ||

Research | Research showed that personalizing content according to students’ skill level resulted in similar learning gains as non-personalized content, but took a shorter amount of time. This was observed in various domains such as simulated air traffic control,<ref>Salden, R. J., Paas, F., Broers, N. J., and Van Merriënboer, J. J. (2004) | ||

. [http://link.springer.com/article/10.1023/B:TRUC.0000021814.03996.ff Mental effort and performance as determinants for the dynamic selection of learning tasks in air traffic control training]. ''Instructional science, 32''(1-2), 153-172.</ref>, Algebra<ref>Cen, H., Koedinger, K. R., and Junker, B. (2007). [http://dl.acm.org/citation.cfm?id=1563681 Is Over Practice Necessary?-Improving Learning Efficiency with the Cognitive Tutor through Educational Data Mining]. ''Frontiers in Artificial Intelligence and Applications, 158'', 511.</ref>, Geometry<ref>Salden, R.J.C.M., Aleven, V., Schwonke, R. and Renkl, A. (2010). [http://link.springer.com/article/10.1007/s11251-009-9107-8 The expertise reversal effect and worked examples in tutored problem solving]. ''Instructional Sicience, 38'', 289--307.</ref>, and health sciences<ref>Corbalan, G., Kester, L. and van Merrieonboer, J.J.G. (2008). [http://www.sciencedirect.com/science/article/pii/S0361476X08000118 Selecting learning tasks: Effects of adaptation and shared control on learning efficiency and task involvement]. ''Contemporary Educational Psycholoy, 33'', 733--756.</ref>. | |||

===Discussion=== | ===Discussion=== | ||

Shepherds, writing workshop participants, and learning system stakeholders (i.e., data mining experts, learning scientists, and educators) agreed that the design pattern’s solution could address the identified problem. | |||

===Data=== | ===Data=== | ||

According to an [[Analysis:Student_affect_and_interaction_behavior_in_ASSISTments#skilllevel | | According to an [[Analysis:Student_affect_and_interaction_behavior_in_ASSISTments#skilllevel | ASSISTments math online learning system data]], boredom and gaming behavior correlated with problem difficulty (i.e., evidenced by answer correctness and number of hint requests). | ||

<!--===Applied evaluation=== | <!--===Applied evaluation=== | ||

Results from randomized controlled trials (RCTs) or similar tests that measures the pattern's effectiveness in an actual application. For example, compare student learning gains in an online learning system with and without applying the pattern. --> | Results from randomized controlled trials (RCTs) or similar tests that measures the pattern's effectiveness in an actual application. For example, compare student learning gains in an online learning system with and without applying the pattern. --> | ||

==Example== | |||

Many online learning systems were designed to adapt to students’ skill level. For example, SQL-Tutor provides students with problems on SQL programming that are appropriate to their level of knowledge<ref>Mitrovic, A., and Martin, B. (2004). [http://link.springer.com/chapter/10.1007/978-3-540-27780-4_22 Evaluating adaptive problem selection]. In ''Adaptive hypermedia and adaptive web-based systems'' (pp. 185-194). Springer Berlin Heidelberg.</ref>. [[Cognitive_tutor_algebra | Cognitive Tutor Algebra]] is another learning system that tracks student mastery on a particular knowledge component and provides them with algebra problems that are appropriate to their skill level<ref name="Koedinger2007"/>. The [[ASSISTments]] online learning system provides an IF-THEN-ELSE functionality that allows teachers to control the problem sets assigned to students based on their performance<ref>Donnelly, C.J. (2015). [https://www.wpi.edu/Pubs/ETD/Available/etd-042315-135723/unrestricted/cdonnelly_thesis.pdf Enhancing Personalization Within ASSISTments (Doctoral dissertation)].</ref>. This can be used to identify students’ skill level and to assign the appropriate problem set. | |||

A concrete example for applying this pattern is a teacher that encodes multiple math problem sets with varying levels of difficulty into an online learning system (e.g., single-digit subtraction, multiple-digit subtraction, subtraction by regrouping). As students answer questions in their homework, the online learning system would keep track of students’ progress to identify their skill level such as low (i.e., student makes mistakes ≥ 60% of the time), medium (i.e., student makes mistakes < 60% and ≥ 40% of the time) or high (i.e., student makes mistakes < 40% of the time). Based on students’ performance, the online learning system would provide the appropriate problem set so that it is more likely for students to receive questions that are fit for their skill level. | |||

==Related patterns== | ==Related patterns== | ||

The {{Patternlink|Personalized Problems}} design pattern is an implementation of the {{Patternlink|Different Exercise Levels}} design pattern in online learning systems. The system should use the {{Patternlink|Just Enough Practice}} design pattern so that students reach mastery before switching to a more challenging problem set. The {{Patternlink|Differentiated Feedback}} or {{Patternlink|Worked examples}} design patterns may be used to facilitate learning. | |||

==References== | ==References== | ||

<references/> | <references/> | ||

[[Category:Design_patterns]] [[Category:ASSISTments]] | ==External Links== | ||

*[http://assistments.org ASSISTments] | |||

* [http://www.carnegielearning.com/learning-solutions/software/cognitive-tutor/ Cognitive Tutor Software] | |||

[[Category:Design_patterns]] [[Category:ASSISTments]] [[Category:Full_Pattern]] [[Category:Pattern Language for Math problems and Learning Support in Online Learning Systems]] [[Category:Online Learning System]] [[Category:Intelligent Tutoring System]] | |||

Latest revision as of 10:18, 6 June 2017

| Personalized Problems | |
|---|---|
| Contributors | Paul Salvador Inventado, Peter Scupelli |
| Last modification | June 6, 2017 |
| Source | Inventado and Scupelli (in press 2015)[1] |
| Pattern formats | OPR Alexandrian |
| Usability | |
| Learning domain | General |
| Stakeholders | Teachers, Students, System developers |
| Production | |
| Data analysis | Student affect and interaction behavior in ASSISTments |
| Confidence | |
| Evaluation | PLoP 2015 shepherding and writing workshop Talk:ASSISTments |
| Application | ASSISTments |
| Applied evaluation | ASSISTments |

Assign problem-solving activities that are appropriate for a student’s skill level[1].

Context

Content creators design problems for students to solve to better understand the concepts taught.

Problem

Students become bored or disengage from an activity if they are asked to solve problems that are either too easy or too difficult.

Forces

- Prior knowledge. Students cannot solve a problem if they lack the necessary skills[2].

- Desirable difficulty. Activities that are too easy or do not challenge learners’ understanding of a concept require less mental processing and often result in less learning[3][4].

- Learning rate. Student learning rates vary because of differences in prior knowledge, learning experiences, and quality of instruction received[5].

- Persistence. Students may disengage from a learning activity if they get stuck too long while trying to solve it[6][7].

Solution

Therefore, assign problems that are appropriate for a student’s skill level. A student’s capability to solve a problem can be identified using assessments of their knowledge of prerequisite skills, or using model-based predictors[8].
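The assessment-based check described in the solution can be sketched as follows. This is a minimal illustration, not the method used by any particular system: the function name `is_ready`, the skill names, and the 70% mastery cutoff are all assumptions made for the example.

```python
from typing import Dict, Iterable

# Assumed cutoff: a prerequisite counts as mastered at 70% assessment accuracy.
MASTERY_THRESHOLD = 0.7


def is_ready(assessment: Dict[str, float], prerequisites: Iterable[str],
             threshold: float = MASTERY_THRESHOLD) -> bool:
    """Return True if every prerequisite skill meets the mastery threshold.

    Skills missing from the assessment are treated as unmastered (score 0.0).
    """
    return all(assessment.get(skill, 0.0) >= threshold for skill in prerequisites)


# Hypothetical assessment results (fraction of items answered correctly).
scores = {"single_digit_subtraction": 0.9, "place_value": 0.55}
print(is_ready(scores, ["single_digit_subtraction"]))
print(is_ready(scores, ["single_digit_subtraction", "place_value"]))
```

A model-based predictor could replace the fixed cutoff by supplying an estimated probability of mastery for each skill in place of the raw assessment score.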

Consequences

Benefits

- Students are asked to answer problems that they are capable of solving themselves, or with some assistance[9].

- The problem challenges students because it requires skills that students may not have mastered yet.

- Each student is assigned a problem that is appropriate for his/her skill level.

- Students may continue to solve a challenging problem if they have enough prerequisite knowledge.

Liabilities

- Content writers will need to provide different content for each skill level.

- The system needs to keep track of prerequisite and post-requisite skills, as well as the problems associated with those skills, so they can be assigned appropriately.

- The system needs to be capable of measuring students’ skill level and selecting problems dynamically.

- If students’ skill level is incorrectly identified, the system can still give students problems that are too easy or too difficult.
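The bookkeeping that these liabilities imply can be sketched with a small data structure. The sketch below is an assumption about one possible design, not a description of an existing system: the `Skill` and `SkillMap` classes, their method names, and the sample subtraction problems are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Skill:
    """A skill, the skills it depends on, and the problems that practice it."""
    name: str
    prerequisites: Set[str] = field(default_factory=set)
    problems: List[str] = field(default_factory=list)


class SkillMap:
    """Tracks skills so that problems can be selected dynamically."""

    def __init__(self) -> None:
        self.skills: Dict[str, Skill] = {}

    def add(self, skill: Skill) -> None:
        self.skills[skill.name] = skill

    def post_requisites(self, name: str) -> Set[str]:
        """Skills that list `name` as a direct prerequisite."""
        return {s.name for s in self.skills.values() if name in s.prerequisites}

    def assignable_problems(self, mastered: Set[str]) -> List[str]:
        """Problems for unmastered skills whose prerequisites are all mastered."""
        result: List[str] = []
        for skill in self.skills.values():
            if skill.name not in mastered and skill.prerequisites <= mastered:
                result.extend(skill.problems)
        return result


# Hypothetical content: three subtraction skills in a chain.
skills = SkillMap()
skills.add(Skill("single_digit", problems=["9 - 4", "7 - 2"]))
skills.add(Skill("multi_digit", {"single_digit"}, ["48 - 23"]))
skills.add(Skill("regrouping", {"multi_digit"}, ["52 - 27"]))

print(skills.assignable_problems({"single_digit"}))
```

Because the selection depends entirely on the `mastered` set, an incorrect estimate of a student's skills still leads to problems that are too easy or too difficult, which is the last liability listed above.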

Evidence

Literature

Research has shown that personalizing content according to students’ skill level results in learning gains similar to those from non-personalized content, but in less time. This was observed in various domains, such as simulated air traffic control[10], algebra[11], geometry[12], and health sciences[13].

Discussion

Shepherds, writing workshop participants, and learning system stakeholders (i.e., data mining experts, learning scientists, and educators) agreed that the design pattern’s solution could address the identified problem.

Data

According to data from the ASSISTments online math learning system, boredom and gaming behavior correlated with problem difficulty (as evidenced by answer correctness and number of hint requests).

Example

Many online learning systems are designed to adapt to students’ skill level. For example, SQL-Tutor provides students with problems on SQL programming that are appropriate to their level of knowledge[14]. Cognitive Tutor Algebra is another learning system; it tracks student mastery of particular knowledge components and provides students with algebra problems that are appropriate to their skill level[8]. The ASSISTments online learning system provides an IF-THEN-ELSE functionality that allows teachers to control the problem sets assigned to students based on their performance[15]. This functionality can be used to identify students’ skill level and to assign the appropriate problem set.

A concrete example of applying this pattern is a teacher who encodes multiple math problem sets with varying levels of difficulty (e.g., single-digit subtraction, multiple-digit subtraction, subtraction by regrouping) into an online learning system. As students answer questions in their homework, the online learning system keeps track of their progress to identify their skill level as low (i.e., student makes mistakes ≥ 60% of the time), medium (i.e., student makes mistakes < 60% and ≥ 40% of the time), or high (i.e., student makes mistakes < 40% of the time). Based on students’ performance, the online learning system provides the appropriate problem set, making it more likely that students receive questions that fit their skill level.
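The thresholds in this example can be written out as a short sketch. The 60% and 40% cutoffs come from the example above; the function names and the mapping from skill level to problem set are assumptions made for illustration, since the example does not state which set each level receives.

```python
def skill_level(mistakes: int, attempts: int) -> str:
    """Classify a student as 'low', 'medium', or 'high' from their error rate."""
    if attempts <= 0:
        raise ValueError("at least one attempt is needed")
    # Integer comparison avoids floating-point surprises at the boundaries.
    if 100 * mistakes >= 60 * attempts:
        return "low"      # mistakes >= 60% of the time
    if 100 * mistakes >= 40 * attempts:
        return "medium"   # mistakes < 60% and >= 40% of the time
    return "high"         # mistakes < 40% of the time


# Assumed mapping: harder problem sets go to students at higher skill levels.
PROBLEM_SETS = {
    "low": "single-digit subtraction",
    "medium": "multiple-digit subtraction",
    "high": "subtraction by regrouping",
}


def next_problem_set(mistakes: int, attempts: int) -> str:
    """Pick the problem set that matches the student's current skill level."""
    return PROBLEM_SETS[skill_level(mistakes, attempts)]


print(next_problem_set(mistakes=5, attempts=10))
```

A real system would probably wait for a minimum number of attempts before classifying a student, because an error rate computed from one or two answers is unreliable.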

Related patterns

The Personalized Problems design pattern is an implementation of the Different Exercise Levels design pattern in online learning systems. The system should use the Just Enough Practice design pattern so that students reach mastery before switching to a more challenging problem set. The Differentiated Feedback or Worked examples design patterns may be used to facilitate learning.

References

1. Inventado, P.S. & Scupelli, P. (in press 2015). A Data-driven Methodology for Producing Online Learning System Design Patterns. In Proceedings of the 22nd Conference on Pattern Languages of Programs (PLoP 2015). New York: ACM.

2. Sweller, J. (2004). Instructional design consequences of an analogy between evolution by natural selection and human cognitive architecture. Instructional Science, 32(1-2), 9-31.

3. Bjork, R.A. (1994). Memory and metamemory considerations in the training of human beings. In J. Metcalfe and A. Shimamura (Eds.), Metacognition: Knowing about knowing (pp. 185-205). Cambridge, MA: MIT Press.

4. Piaget, J. (1952). The origins of intelligence in children. New York: International Universities Press.

5. Bloom, B. S. (1974). Time and learning. American Psychologist, 29(9), 682.

6. Arnold, A., Scheines, R., Beck, J. E., and Jerome, B. (2005). Time and attention: Students, sessions, and tasks. In Proceedings of the AAAI 2005 Workshop Educational Data Mining (pp. 62-66).

7. D’Mello, S., and Graesser, A. (2012). Dynamics of affective states during complex learning. Learning and Instruction, 22(2), 145-157.

8. Koedinger, K. R., and Aleven, V. (2007). Exploring the assistance dilemma in experiments with cognitive tutors. Educational Psychology Review, 19(3), 239-264.

9. Vygotsky, L. S. (1962). Thought and language. Cambridge, MA: MIT Press.

10. Salden, R. J., Paas, F., Broers, N. J., and Van Merriënboer, J. J. (2004). Mental effort and performance as determinants for the dynamic selection of learning tasks in air traffic control training. Instructional Science, 32(1-2), 153-172.

11. Cen, H., Koedinger, K. R., and Junker, B. (2007). Is Over Practice Necessary? Improving Learning Efficiency with the Cognitive Tutor through Educational Data Mining. Frontiers in Artificial Intelligence and Applications, 158, 511.

12. Salden, R.J.C.M., Aleven, V., Schwonke, R., and Renkl, A. (2010). The expertise reversal effect and worked examples in tutored problem solving. Instructional Science, 38, 289-307.

13. Corbalan, G., Kester, L., and van Merriënboer, J.J.G. (2008). Selecting learning tasks: Effects of adaptation and shared control on learning efficiency and task involvement. Contemporary Educational Psychology, 33, 733-756.

14. Mitrovic, A., and Martin, B. (2004). Evaluating adaptive problem selection. In Adaptive hypermedia and adaptive web-based systems (pp. 185-194). Springer Berlin Heidelberg.

15. Donnelly, C.J. (2015). Enhancing Personalization Within ASSISTments (Doctoral dissertation).