Performance Sheet/OG

| Performance Sheet | |
|---|---|
| Contributors | Joseph Bergin, Christian Kohls, Christian Köppe, Yishay Mor, Michel Portier, Till Schümmer, Steven Warburton |
| Last modification | June 6, 2017 |
| Source | Bergin et al. (2015)[1][2]; Warburton et al. (2016)[3][4] |
| Pattern formats | OPR Alexandrian |
| Usability | |
| Learning domain | |
| Stakeholders | |

Also Known As: Assessment Contract, Rubric, Score Sheet

Rate each of the Refined Criteria on a sheet and aggregate the marks.

Context

You have an Assessment Criteria List and have already applied Criteria Refinement. You are assessing a complex task with multiple dimensions and no clear or obvious right or wrong answers.

Problem

Even when you have clearly defined Assessment Criteria, applying those criteria to the task at hand is difficult for you, and thus even more so for your students.

Forces

Students value consistency and want to understand what you expect of them. But how do you account for unexpectedly high or low student performance? You also want student performances to be comparable. If you score live performances (e.g. presentations, team collaboration, programming activities) at a later time, you may have forgotten how each student or team performed.

Solution

Therefore, prepare a sheet (digital or printed) that lets you easily record the performance for each of the finer-level criteria.

Solution Details

Define a table where each row lists a criterion or sub-criterion, and the columns note the indicators that will be used to award low, medium and high marks. For each criterion (and sub-criterion), fill in the relevant indicators. Make sure that all criteria are considered and documented. Include fields that let you aggregate the finer-level criteria produced by Criteria Refinement.
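For a digital sheet, the table described above maps naturally onto a small tree of criteria. The following is a minimal sketch, not a prescribed format: the `Criterion` class, the numeric `LEVEL_SCORE` mapping (low = 1, medium = 2, high = 3) and the unweighted mean used to aggregate sub-criteria are all illustrative assumptions. It refuses to aggregate while any criterion is still unrated, mirroring the advice to make sure all criteria are considered.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical rating scale: the three columns of the sheet and a numeric value for each.
LEVEL_SCORE = {"low": 1, "medium": 2, "high": 3}


@dataclass
class Criterion:
    name: str
    indicators: dict[str, str]  # rating level -> observable indicator for that level
    sub_criteria: list["Criterion"] = field(default_factory=list)
    rating: Optional[str] = None  # level chosen while marking (leaf criteria only)

    def __post_init__(self) -> None:
        # Every criterion and sub-criterion documents an indicator for each level.
        missing = set(LEVEL_SCORE) - set(self.indicators)
        if missing:
            raise ValueError(f"'{self.name}' lacks indicators for {sorted(missing)}")
        if self.rating is not None and self.rating not in LEVEL_SCORE:
            raise ValueError(f"unknown rating '{self.rating}' for '{self.name}'")

    def aggregate(self) -> float:
        """Score of a rated leaf, or the unweighted mean of its sub-criteria."""
        if not self.sub_criteria:
            if self.rating is None:
                # Refuse to aggregate while any criterion is still unrated.
                raise ValueError(f"'{self.name}' has not been rated")
            return float(LEVEL_SCORE[self.rating])
        scores = [sub.aggregate() for sub in self.sub_criteria]
        return sum(scores) / len(scores)


presentation = Criterion(
    "Presentation",
    {"low": "Hard to follow", "medium": "Mostly clear", "high": "Engaging and clear"},
    sub_criteria=[
        Criterion("Structure",
                  {"low": "No clear order", "medium": "Mostly logical", "high": "Clear storyline"},
                  rating="high"),
        Criterion("Delivery",
                  {"low": "Read from slides", "medium": "Some eye contact", "high": "Free, confident speech"},
                  rating="medium"),
    ],
)
print(presentation.aggregate())  # 2.5
```

The criterion names and indicator texts are invented examples; in practice they come from your Assessment Criteria List and its refinement.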

If the sheet is used for numeric marking, you may want to specify the point bands for low, medium and high marks. You can also define the weighting scheme that combines the various criteria and sub-criteria into an overall mark.
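As an illustration of point bands and a weighting scheme, the sketch below assumes a hypothetical 0-10 point scale per criterion, with bands at 8 and above (high), 5 to below 8 (medium) and below 5 (low). The helpers `band_for` and `weighted_mark`, the thresholds and the example weights are assumptions chosen for the example, not values from the pattern. Weights are given as relative numbers and normalised, so they do not have to sum to 1.

```python
# Hypothetical point bands on a 0-10 scale, checked from the highest threshold down.
MAX_POINTS = 10.0
POINT_BANDS = [("high", 8.0), ("medium", 5.0), ("low", 0.0)]


def band_for(points: float) -> str:
    """Map a (possibly weighted) point score to its low/medium/high band."""
    if not 0.0 <= points <= MAX_POINTS:
        raise ValueError(f"{points} is outside the 0-{MAX_POINTS:g} scale")
    for band, threshold in POINT_BANDS:
        if points >= threshold:
            return band
    raise AssertionError("unreachable: the lowest threshold is 0")


def weighted_mark(points: dict[str, float], weights: dict[str, float]) -> float:
    """Combine per-criterion points using relative weights (normalised to sum to 1)."""
    if set(points) != set(weights):
        raise ValueError("every criterion needs both points and a weight")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(points[c] * weights[c] for c in points) / total_weight


points = {"Content": 8, "Presentation": 6, "Teamwork": 9}
weights = {"Content": 5, "Presentation": 3, "Teamwork": 2}  # relative weights
mark = weighted_mark(points, weights)
print(mark, band_for(mark))  # 7.6 medium
```

Writing the bands and weights down explicitly, rather than applying them in your head, is what makes the overall mark reproducible and easy to publish alongside the sheet.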

By making the performance sheet available to students, you support Transparent Assessment. Students can then also use the Performance Sheet to assess themselves and their peers. Another option is to have students create such a performance sheet as part of their learning; in that case, consider skipping the criteria refinement so as not to overwhelm them. You may also have Hidden Criteria that do not appear on the published sheets.

Positive Consequences

Marking is easier and fairer. It becomes easier to compare students. You don’t forget any criteria. A standardized performance sheet makes it easier for two independent reviewers to grade the same work. If you apply this in the context of Transparent Assessment, students can use the performance sheet as guidance in their actual work.

Negative Consequences

Beware of mechanical scoring that does not take individual cases and skills into account. Allow Multiple Right Ways (or alternative paths) and a Diversity of Assessment to avoid this negative consequence.

Example

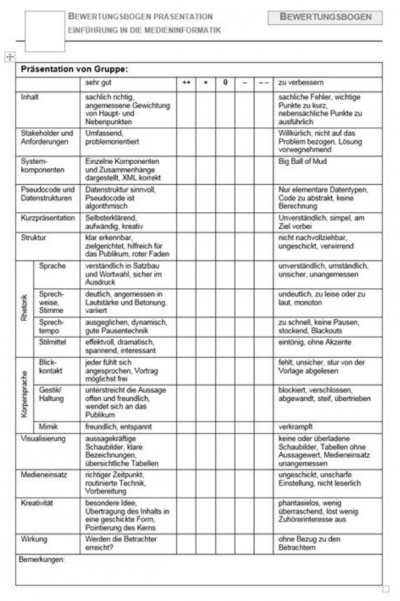

This printed sheet was used to write down the detailed performances of teams presenting their project results. The presentation was one criterion of the overall performance.

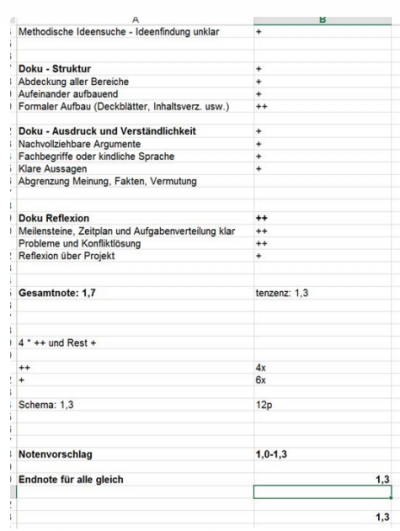

This Excel sheet was used to rate project results according to several criteria. Two tutors used the same sheet and rated the students independently.
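When two tutors fill in the same sheet independently, their ratings still have to be combined. The Excel sheet itself is not reproduced here; as a sketch, assuming each tutor's sheet can be exported as a mapping from criterion to points, the hypothetical `compare_raters` function below averages the criteria on which the tutors roughly agree and flags the others for discussion. The `tolerance` of 2 points is an arbitrary illustrative choice.

```python
def compare_raters(
    rater_a: dict[str, float],
    rater_b: dict[str, float],
    tolerance: float = 2.0,
) -> tuple[dict[str, float], list[str]]:
    """Average criteria the two raters roughly agree on; flag the rest for discussion."""
    if set(rater_a) != set(rater_b):
        raise ValueError("both raters must score the same criteria")
    agreed: dict[str, float] = {}
    to_discuss: list[str] = []
    for criterion, a in rater_a.items():
        b = rater_b[criterion]
        if abs(a - b) > tolerance:
            to_discuss.append(criterion)  # too far apart to simply average
        else:
            agreed[criterion] = (a + b) / 2
    return agreed, to_discuss


tutor_a = {"Content": 8, "Presentation": 6, "Teamwork": 9}
tutor_b = {"Content": 7, "Presentation": 3, "Teamwork": 9}
agreed, to_discuss = compare_raters(tutor_a, tutor_b)
print(agreed)      # {'Content': 7.5, 'Teamwork': 9.0}
print(to_discuss)  # ['Presentation']
```

Flagging large disagreements instead of silently averaging them keeps the conversation between the two reviewers focused on the criteria where the sheet's indicators were read differently.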

The Layers Project templates included evaluation rubrics:

http://ilde.upf.edu/layers/v/bj0

http://ilde.upf.edu/layers/v/bjr

Assessment rubrics are popular in many courses, and virtual learning environments (VLEs) now offer rubric tools as part of their built-in assessment and grading toolkits.

Related patterns

By providing a Performance Sheet, you avoid the situation in which Students Guess Criteria. However, it becomes more difficult to have Flexible Criteria and to allow Multiple Paths. This pattern is one of the Open Instruments of Assessment.

References

1. Pattern published in Bergin, J., Kohls, C., Köppe, C., Mor, Y., Portier, M., Schümmer, T., & Warburton, S. (2015). Assessment-driven course design foundational patterns. In Proceedings of the 20th European Conference on Pattern Languages of Programs (EuroPLoP 2015) (p. 31). New York: ACM.

2. Pattern also published in Bergin, J., Kohls, C., Köppe, C., Mor, Y., Portier, M., Schümmer, T., & Warburton, S. (in press 2015). Assessment-Driven Course Design - Fair Play Patterns. In Proceedings of the 22nd Conference on Pattern Languages of Programs (PLoP 2015). New York: ACM.

3. Patlet also published in Warburton, S., Mor, Y., Kohls, C., Köppe, C., & Bergin, J. (2016). Assessment driven course design: a pattern validation workshop. Presented at 8th Biennial Conference of EARLI SIG 1: Assessment & Evaluation. Munich, Germany.

4. Patlet also published in Warburton, S., Bergin, J., Kohls, C., Köppe, C., & Mor, Y. (2016). Dialogical Assessment Patterns for Learning from Others. In Proceedings of the 10th Travelling Conference on Pattern Languages of Programs (VikingPLoP 2016). New York: ACM.