Just Enough Practice

| Just Enough Practice | |
|---|---|
| Contributors | Paul Salvador Inventado, Peter Scupelli |
| Last modification | June 5, 2017 |
| Source | Inventado and Scupelli (in press 2015)[1]; Inventado and Scupelli (2015)[2] |
| Pattern formats | OPR Alexandrian |
| Usability | |
| Learning domain | General |
| Stakeholders | Students, Teachers, System developers |
| Production | |
| Data analysis | Student affect and interaction behavior in ASSISTments |
| Confidence | |
| Evaluation | EuroPLoP 2015 shepherding and writing workshop; PLoP 2015 shepherding and writing workshop; Talk:ASSISTments |
| Application | ASSISTments |
| Applied evaluation | ASSISTments |

Allow students to practice a skill until they master it, then switch to another skill to avoid over-practice[1][2].

Context

Content creators for ASSISTments Skill Builders design problem-solving activities that help students master a particular skill. A Skill Builder problem set requires a student to answer three problems correctly in a row before moving on to new assignments, while struggling students continue to receive extended practice.
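The Skill Builder completion rule amounts to a streak counter. The following Python sketch illustrates it under that reading; it is not ASSISTments code, and the function `problems_to_mastery` and its `streak_needed` parameter are hypothetical names chosen for this example.

```python
from typing import Iterable, Optional


def problems_to_mastery(answers: Iterable[bool], streak_needed: int = 3) -> Optional[int]:
    """Return how many problems the student answered before reaching
    `streak_needed` consecutive correct answers, or None if never reached.

    Hypothetical helper: `answers` is the student's answer history in order,
    True for a correct answer and False for an incorrect one.
    """
    streak = 0
    for count, correct in enumerate(answers, start=1):
        # A correct answer extends the streak; an incorrect one resets it.
        streak = streak + 1 if correct else 0
        if streak >= streak_needed:
            return count  # the problem set is complete at this problem
    return None  # still practicing: keep assigning problems for this skill
```

For instance, the answer sequence correct, wrong, correct, correct, correct completes the set on the fifth problem, because the wrong answer resets the streak.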

Problem

Students cannot make the most of their learning time if they are asked to practice skills they have already mastered.

Forces

- Diminishing returns. Students gain the most when they first practice a skill; as continued practice brings them to mastery, each additional problem yields smaller learning gains[3][4].

- Over-practice. Students' learning gains are almost the same whether they stop practicing a skill after mastery or over-practice it[5].

- Limited resources. Student attention and patience are limited, so students may switch to other tasks if they feel they are no longer learning from an activity[6][7].

Solution

Therefore, give students problems to practice a skill until they master it, then give them new problems to practice a different skill. Mastery can be assessed in different ways, such as students' performance on a skill-mastery test or a statistical model's prediction of student mastery[8].
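One common statistical model for predicting mastery is Bayesian knowledge tracing (BKT), the family of models that [8] extends with per-student parameters. The Python sketch below is a minimal, non-individualized version for illustration only; the parameter values, the 0.95 mastery threshold, and the names `BKTParams`, `update_mastery`, and `answers_until_mastery` are assumptions, not values or code from ASSISTments or from [8].

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class BKTParams:
    # Illustrative values only; real systems fit these per skill from data.
    p_init: float = 0.3     # P(L0): skill already known before practice
    p_transit: float = 0.1  # P(T): chance of learning the skill at each opportunity
    p_slip: float = 0.1     # P(S): wrong answer even though the skill is known
    p_guess: float = 0.2    # P(G): right answer even though the skill is not known


def update_mastery(p_known: float, correct: bool, params: BKTParams) -> float:
    """One knowledge-tracing step: condition P(known) on the observed answer
    with Bayes' rule, then allow for learning on this opportunity."""
    if correct:
        known = p_known * (1 - params.p_slip)
        unknown = (1 - p_known) * params.p_guess
    else:
        known = p_known * params.p_slip
        unknown = (1 - p_known) * (1 - params.p_guess)
    posterior = known / (known + unknown)
    return posterior + (1 - posterior) * params.p_transit


def answers_until_mastery(
    answers: Iterable[bool],
    params: Optional[BKTParams] = None,
    threshold: float = 0.95,  # assumed mastery cut-off
) -> Optional[int]:
    """Return the 1-based index of the answer after which estimated mastery
    first reaches `threshold`, or None if it never does."""
    if params is None:
        params = BKTParams()
    p_known = params.p_init
    for count, correct in enumerate(answers, start=1):
        p_known = update_mastery(p_known, correct, params)
        if p_known >= threshold:
            return count  # predicted mastery: switch the student to a new skill
    return None
```

A correct answer raises the estimate and an incorrect one lowers it, so poorly chosen slip, guess, or threshold values are one way the mis-predicted mastery described under Liabilities can arise.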

Consequences

Benefits

- Students get enough practice to master a skill.

- Students do not spend unnecessary time over-practicing a skill when it does not contribute to learning gains.

- Students make better use of their time by learning more skills in the allotted time.

Liabilities

- The online learning system needs to support the measurement of skill mastery before the pattern can be applied.

- If skill mastery is incorrectly predicted, the learning system may cause over-practice of a skill or, worse, move students on before they have practiced enough to master it.

- Aside from creating problems to practice a particular skill, content creators will also need to prepare problems that target other skills students are asked to learn.

Evidence

Literature

An experiment conducted by Cen and colleagues[5] revealed that students had similar learning gains regardless of whether they over-practiced a skill or stopped practicing after mastery. However, students who stopped after mastery needed less time. The authors suggest that students should switch to learning new skills instead of over-practicing skills they have already mastered.

Discussion

Shepherds, writing workshop participants, and learning system stakeholders (i.e., data mining experts, learning scientists, and educators) agreed that over-practice could be common among online learning systems, and adapting problems to student mastery could address this problem.

Data

Data from the ASSISTments math online learning system showed that student frustration correlated with students repeatedly answering problems for skills they had already mastered.

Example

Some online learning systems measure students' skill mastery to control the amount of practice they provide. For example, Cognitive Tutor Algebra and Cognitive Tutor Geometry are online learning systems that track student mastery of a particular skill and give students problems that help them master it[9][10]. Once the system detects that a student has mastered a skill, it selects a different skill for the student to practice. The ASSISTments online learning system provides IF-THEN-ELSE functionality that lets content creators control which problems are assigned to a student according to the student's performance[11]. With this functionality, students can be assigned problems that practice a particular skill and then be switched to another problem set after mastering that skill.

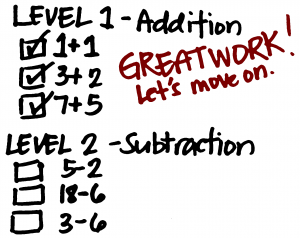
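ASSISTments' own rule format is not described in this pattern, so the Python sketch below models the IF-THEN-ELSE idea generically: a node tests the student's performance record and routes to one of two branches, which may themselves be further rules. The classes `ProblemSet` and `IfThenElse`, the function `next_assignment`, and the shape of the performance record are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Union

# Hypothetical performance record: skill name -> whether the student mastered it.
Performance = Dict[str, bool]


@dataclass
class ProblemSet:
    name: str


@dataclass
class IfThenElse:
    condition: Callable[[Performance], bool]  # tested against the student's record
    then_branch: "Node"  # followed when the condition holds
    else_branch: "Node"  # followed otherwise


Node = Union[ProblemSet, IfThenElse]


def next_assignment(node: Node, performance: Performance) -> ProblemSet:
    """Follow IF-THEN-ELSE branches until a concrete problem set is reached."""
    while isinstance(node, IfThenElse):
        node = node.then_branch if node.condition(performance) else node.else_branch
    return node


# IF the student mastered decimal addition THEN assign decimal subtraction,
# ELSE keep assigning decimal addition.
homework_rule = IfThenElse(
    condition=lambda perf: perf.get("decimal addition", False),
    then_branch=ProblemSet("decimal subtraction"),
    else_branch=ProblemSet("decimal addition"),
)
```

Representing rules as a small tree keeps each decision local, so a content creator could nest another rule in either branch to build longer skill sequences.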

A concrete example of applying the pattern is a teacher designing homework for her class. She can design one problem set that helps students practice decimal addition and another for decimal subtraction. As students answer these problems, an online learning system can track how many problems each student answers correctly in a row. If, for example, a student answers three problems correctly in a row, the student advances to decimal subtraction problems; otherwise, the student continues practicing decimal addition problems.
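The teacher's homework can be sketched as an ordered list of skills, with the student advancing after three consecutive correct answers. This is an illustrative Python sketch under that assumption; `assign_skills` and its parameters are hypothetical and do not correspond to any ASSISTments API.

```python
from typing import Iterable, List


def assign_skills(skills: List[str], answers: Iterable[bool], streak_needed: int = 3) -> List[str]:
    """Return the skill practiced by each answer. The student stays on a skill
    until `streak_needed` consecutive correct answers, then advances."""
    practiced: List[str] = []
    index, streak = 0, 0
    for correct in answers:
        if index >= len(skills):
            break  # every skill in the homework is mastered; ignore further answers
        practiced.append(skills[index])
        # A correct answer extends the streak; an incorrect one resets it.
        streak = streak + 1 if correct else 0
        if streak >= streak_needed:
            index, streak = index + 1, 0  # mastered: move to the next skill
    return practiced
```

With `skills = ["decimal addition", "decimal subtraction"]` and the answers correct, wrong, correct, correct, correct followed by three more correct answers, the first five answers count toward decimal addition and the next three toward decimal subtraction.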

Related patterns

Make sure the system implements the Just Enough Practice design pattern when students are asked to use the Try It Yourself or Build and Maintain Confidence design patterns. When implementing the Just Enough Practice design pattern, move on to more challenging problems after mastery using the Personalized Problems design pattern. The Differentiated Feedback or Worked Examples design patterns may be used to facilitate learning.

References

- [1] Inventado, P. S., and Scupelli, P. (in press 2015). A Data-driven Methodology for Producing Online Learning System Design Patterns. In Proceedings of the 22nd Conference on Pattern Languages of Programs (PLoP 2015). New York: ACM.

- [2] Inventado, P. S., and Scupelli, P. (2015). Data-Driven Design Pattern Production: A Case Study on the ASSISTments Online Learning System. In Proceedings of the 20th European Conference on Pattern Languages of Programs (EuroPLoP 2015). New York: ACM.

- [3] Rohrer, D., and Taylor, K. (2006). The effects of over-learning and distributed practice on the retention of mathematics knowledge. Applied Cognitive Psychology, 20, 1209-1224.

- [4] Sweller, J. (2004). Instructional design consequences of an analogy between evolution by natural selection and human cognitive architecture. Instructional Science, 32(1-2), 9-31.

- [5] Cen, H., Koedinger, K. R., and Junker, B. (2007). Is Over Practice Necessary? Improving Learning Efficiency with the Cognitive Tutor through Educational Data Mining. Frontiers in Artificial Intelligence and Applications, 158, 511.

- [6] Arnold, A., Scheines, R., Beck, J. E., and Jerome, B. (2005). Time and attention: Students, sessions, and tasks. In Proceedings of the AAAI 2005 Workshop on Educational Data Mining (pp. 62-66).

- [7] Bloom, B. S. (1974). Time and learning. American Psychologist, 29(9), 682.

- [8] Yudelson, M. V., Koedinger, K. R., and Gordon, G. J. (2013). Individualized Bayesian knowledge tracing models. In Artificial Intelligence in Education (pp. 171-180). Springer Berlin Heidelberg.

- [9] Aleven, V., McLaren, B., Roll, I., and Koedinger, K. (2006). Toward meta-cognitive tutoring: A model of help seeking with a Cognitive Tutor. International Journal of Artificial Intelligence in Education, 16(2), 101-128.

- [10] Koedinger, K. R., and Aleven, V. (2007). Exploring the assistance dilemma in experiments with cognitive tutors. Educational Psychology Review, 19(3), 239-264.

- [11] Donnelly, C. J. (2015). Enhancing Personalization Within ASSISTments (Doctoral dissertation).